Woman Warns Of How Company Used AI Deepfake Of Her For Erectile Dysfunction Pills Ad

Looks so real.

Here's your reminder not to immediately believe everything you see on the Internet.

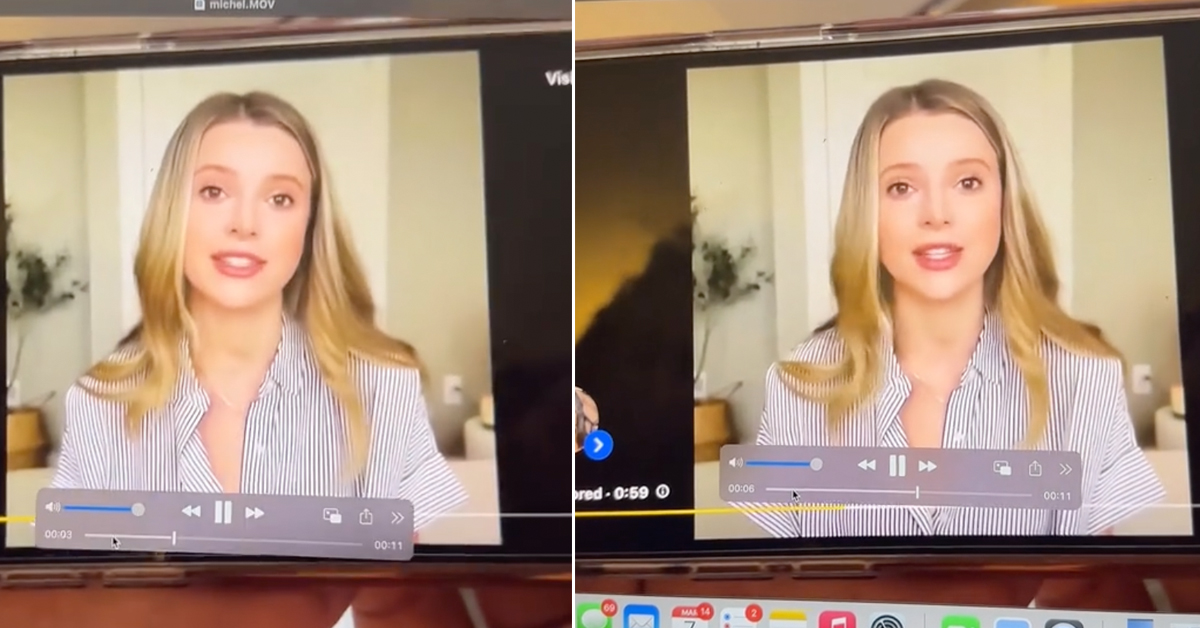

A woman recently shared how a company allegedly stole her likeness to create a deepfake advertisement promoting erectile dysfunction pills.

TikTok user Michel Janse said she found out about it when people sent her the ad while she was away on holiday.

"They [the company] pulled footage from by far, the most vulnerable video on my channel, where I was sitting in my bedroom explaining very traumatic and difficult things that I had gone through in the years prior," she said.

"So this ad was of me in my bedroom, in my old apartment... wearing my clothes talking about their pill," she explained.

The AI-generated woman looks exactly like her and moves and talks in a remarkably realistic manner.

"The only difference is my voice," she said.

In a snippet of the ad, the 'woman' talks about how her partner has "difficulty" maintaining an erection.

Janse played the video for a few seconds before cutting back to herself on camera.

"I actually think it's super important to talk about because we are now entering this era of living our lives online to where we need to question everything we see. Someone that you know could be in a video saying something to you, looks exactly like them and it could be completely fabricated," she said.

"I honestly don't know how we as a society can be like more discerning as to being able to tell what is real and what is fake 'cause it's gonna continue to get more and more realistic and accurate every time."

"The Internet is changing... fast," she added.

AI-generated videos have become more prevalent in recent years. At the moment there is little regulation, which has led to concerns about misuse and a loss of trust in visual media.

Earlier this year, explicit deepfakes of Taylor Swift circulated on the Internet, forcing X to block searches for the singer's name. However, people were still able to find plenty of the AI-generated images under the Media tab.

Recently, Alibaba Group demonstrated a new type of AI that can turn still images into animated character videos.

The lack of regulation around deepfakes raises several concerns, including misinformation and the erosion of trust in visual media altogether.

If viewers can't be sure what's real and what's fake, they may become sceptical of everything they see.

As Janse's case shows, deepfakes can also be used to damage someone's reputation by showing them doing or saying things they never did.

To tackle the problem, tech companies are developing tools to detect deepfakes, and some governments are considering new laws.